Written by Louise Velayo

Published on Dec 19, 2025

How ICP Measures Node Performance When Nodes Aren’t in a Subnet

Today, roughly 52% of nodes in the ICP network are not assigned to a subnet. These nodes represent spare capacity: powered on, registered, but not actively participating in consensus.

In our previous post, we explained that under performance-based rewards, only nodes assigned to a subnet directly earn rewards.

Which naturally raises a few questions:

What happens to rewards for nodes that aren’t assigned?

Can unassigned nodes still be penalized?

And if so, based on what?

The short answer is: their rewards are extrapolated from the performance of the nodes that are assigned.

In this post, I’ll explain what reward extrapolation is, and how it actually works under the hood.

The Intuition Behind Extrapolation

At a high level, ICP rewards node providers for fleet performance, not just individual machines.

Since unassigned nodes don’t produce blocks, the network can’t measure their performance directly. Instead, it assumes that the performance of a node provider’s assigned nodes is representative of the provider’s operational quality overall.

So every day, the system asks a simple question:

How well did the nodes you actively ran perform today — and what does that imply for the rest of your fleet?

That’s where extrapolation comes in.

How Reward Extrapolation Actually Works

Each day, the performance of assigned nodes is extrapolated to unassigned nodes in the following steps:

Only eligible nodes get rewarded. A node is eligible for rewards if, and only if, it is in the ICP registry.

Measure real performance. Each day, the network collects trustworthy metrics from nodes that are assigned to a subnet:

blocks successfully proposed

blocks failed

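Calculate the daily failure rate. For each assigned node:

$$\text{daily failure rate} = \frac{\text{blocks failed}}{\text{blocks proposed} + \text{blocks failed}}$$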
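As a minimal Python sketch of this step: the function name is hypothetical, and treating a node with no recorded blocks as having a failure rate of 0 is an assumption the post doesn't address.

```python
def daily_failure_rate(blocks_proposed: int, blocks_failed: int) -> float:
    """Share of a node's block-making attempts that failed on one day."""
    total = blocks_proposed + blocks_failed
    if total == 0:
        # Assumption: no recorded attempts is treated as no failures.
        return 0.0
    return blocks_failed / total


# 90 blocks proposed and 10 failed: 10 / (90 + 10) = 0.1
print(daily_failure_rate(90, 10))
```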

Discount systemic issues. Not all failures are the node’s fault. Some are systemic. To account for this, the node’s relative failure rate is calculated by subtracting the subnet’s failure rate (defined as the 75th percentile of daily failure rates across the subnet) from the node’s daily failure rate. This isolates operator-specific performance from broader network conditions.
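The sketch below shows one way to compute relative failure rates for a single subnet on a single day. Two details are assumptions, not statements from this post: the percentile uses linear interpolation between ranks (the same convention as NumPy's default), and negative differences are clamped to 0 so a node can't end up below zero. The function and node names are hypothetical.

```python
import math


def percentile(values: list[float], q: float) -> float:
    """q-th quantile (q in 0..1), linearly interpolated between closest ranks.

    Assumption: the post says "75th percentile" but not which interpolation
    rule is used.
    """
    if not values:
        raise ValueError("percentile of an empty list")
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def relative_failure_rates(subnet_rates: dict[str, float]) -> dict[str, float]:
    """Subtract the subnet's failure rate (75th percentile) from each node's.

    Assumption: results below zero are clamped to 0.0.
    """
    subnet_rate = percentile(list(subnet_rates.values()), 0.75)
    return {node: max(0.0, rate - subnet_rate) for node, rate in subnet_rates.items()}


# Hypothetical five-node subnet on one day: the 75th percentile is 0.2,
# so only n5 keeps a nonzero relative failure rate (1.0 - 0.2 = 0.8).
rates = {"n1": 0.0, "n2": 0.0, "n3": 0.0, "n4": 0.2, "n5": 1.0}
print(relative_failure_rates(rates))
```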

Extrapolate to unassigned nodes. Now comes the key step. For each node provider, the system:

takes all assigned nodes for that provider

computes the average relative failure rate across them

That average becomes the extrapolated failure rate applied to all unassigned nodes for that provider for that day.

This process runs daily, which makes it robust to:

changing subnet assignments

varying reward window lengths

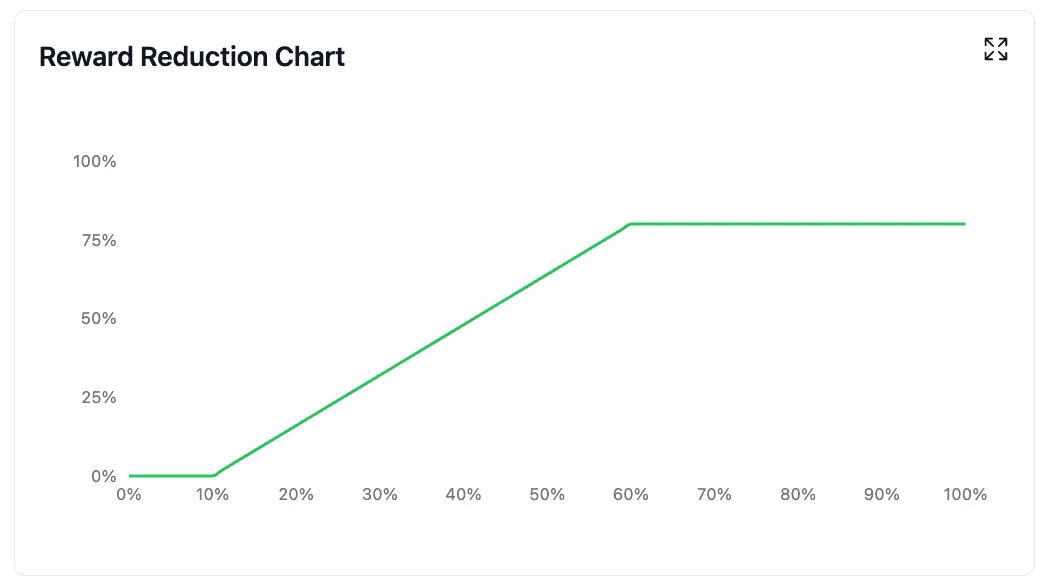

Apply linear reward reduction. Rewards are reduced linearly based on failure rate:

below 0.1 → no reduction

above 0.6 → automatic 80% reduction

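For values in between, the reduction grows linearly from 0% at a failure rate of 0.1 to 80% at 0.6:

$$\text{reward reduction} = 0.8 \times \frac{\text{failure rate} - 0.1}{0.6 - 0.1}$$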

Here, the failure rate is either:

the relative failure rate (for assigned nodes)

the extrapolated failure rate (for unassigned nodes)
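The reduction rule can be sketched as a small function. The thresholds (0.1 and 0.6) and the 80% cap come from the text; the constant and function names are hypothetical, and the in-between case assumes a straight line between the two thresholds.

```python
MIN_FAILURE_RATE = 0.1  # at or below this: no reduction
MAX_FAILURE_RATE = 0.6  # at or above this: maximum reduction
MAX_REDUCTION = 0.8     # 80% cap


def reward_reduction(failure_rate: float) -> float:
    """Linear reward reduction for a relative or extrapolated failure rate."""
    if failure_rate <= MIN_FAILURE_RATE:
        return 0.0
    if failure_rate >= MAX_FAILURE_RATE:
        return MAX_REDUCTION
    span = MAX_FAILURE_RATE - MIN_FAILURE_RATE
    return MAX_REDUCTION * (failure_rate - MIN_FAILURE_RATE) / span


print(round(reward_reduction(0.35), 4))  # 0.4
print(round(reward_reduction(0.5), 4))   # 0.64
```

A failure rate of 0.35 sits halfway between the two thresholds, so it costs half the maximum reduction.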

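Calculate final rewards. Finally, each node’s daily reward is its base reward scaled by its performance multiplier:

$$\text{final reward} = \text{base reward} \times (1 - \text{reward reduction})$$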

Let's take a look at this in action.

A Worked Example

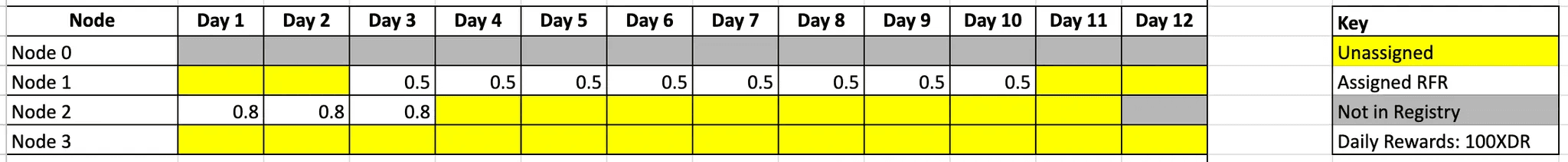

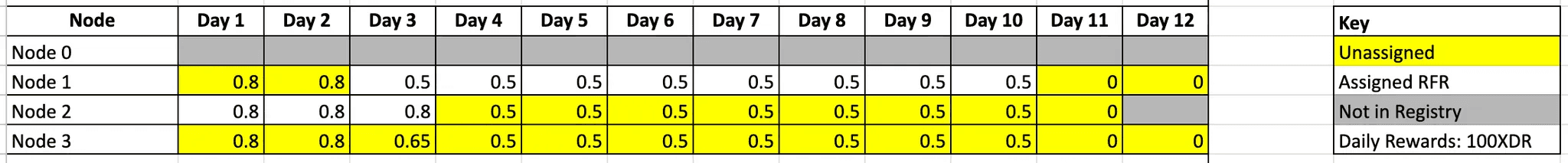

Steps 1–4:

Let’s make this concrete.

Assume a node provider has three nodes, each with a base reward of 100 XDR per day, over a 12-day reward period.

During this period:

some nodes are assigned to a subnet

others are not

At this stage, we assume that relative failure rates for assigned nodes have already been calculated (steps 1–4 above).

Step 5:

For each day, we take the average relative failure rate of the assigned nodes and assign that value to all unassigned nodes.

After the calculated average is assigned to each unassigned node, the failure rates of the 3 nodes over the 12 days look like the following:

Notice that for:

Days 1–2 and 4–10: only one node is assigned

→ no average needs to be calculated; the assigned node’s failure rate is extrapolated to the others

Day 3: two assigned nodes with failure rates of 0.5 and 0.8

→ unassigned nodes receive the average: 0.65

Days 11–12: no nodes assigned

→ failure rate defaults to 0, meaning full rewards

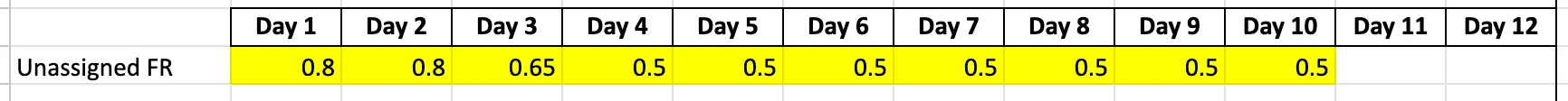

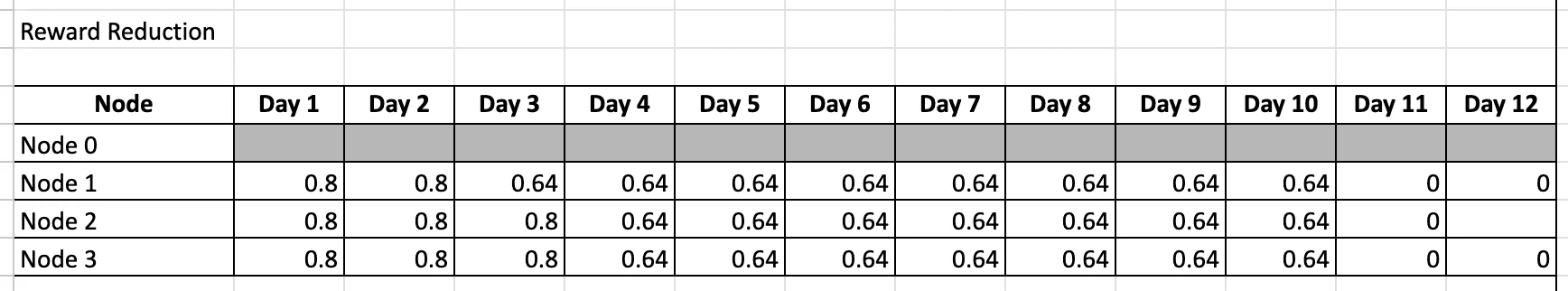

Step 6:

Using the linear formula, we convert failure rates into reward reductions for each node, each day.

After applying this, the reward reductions for the 3 nodes over the 12 days look like the following:

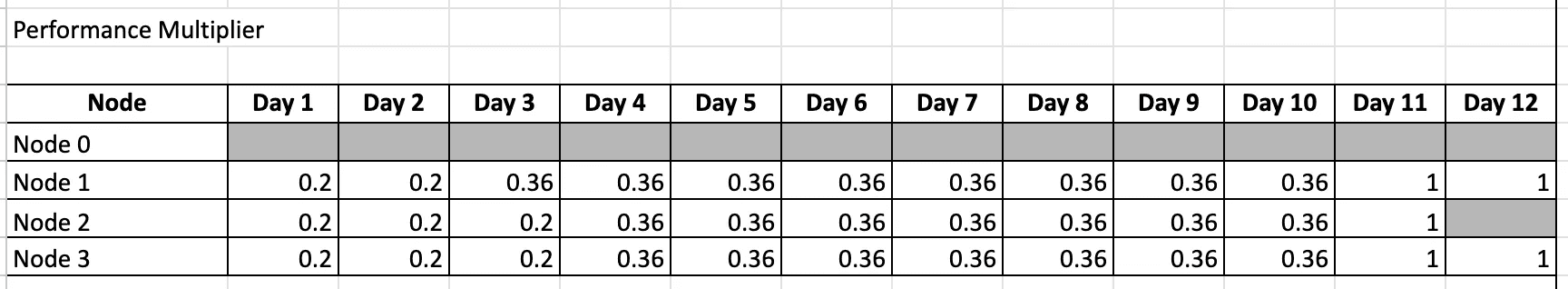

The performance multiplier is then simply 1 - reward reduction:

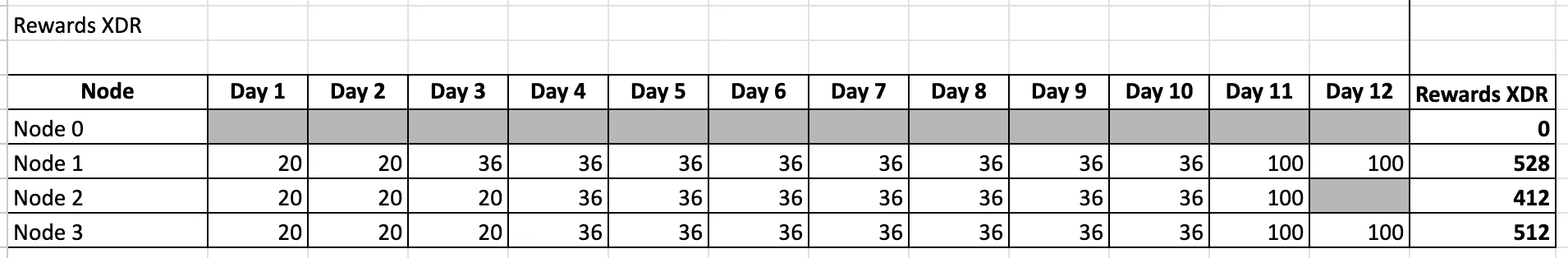

Step 7:

Multiply the performance multiplier by the base reward (100 XDR), and you get the final daily payout per node.

The One Thing Node Providers Should Remember

All of this math boils down to one simple reality:

Your rewards are determined by the nodes you have in a subnet.

Assigned nodes dictate:

the rewards they earn themselves

and the rewards earned by every unassigned node you own

Which means:

If an assigned node goes offline → act fast

If an unassigned node goes offline → it won’t directly affect rewards

Operationally, this makes subnet assignment the critical signal for node providers.

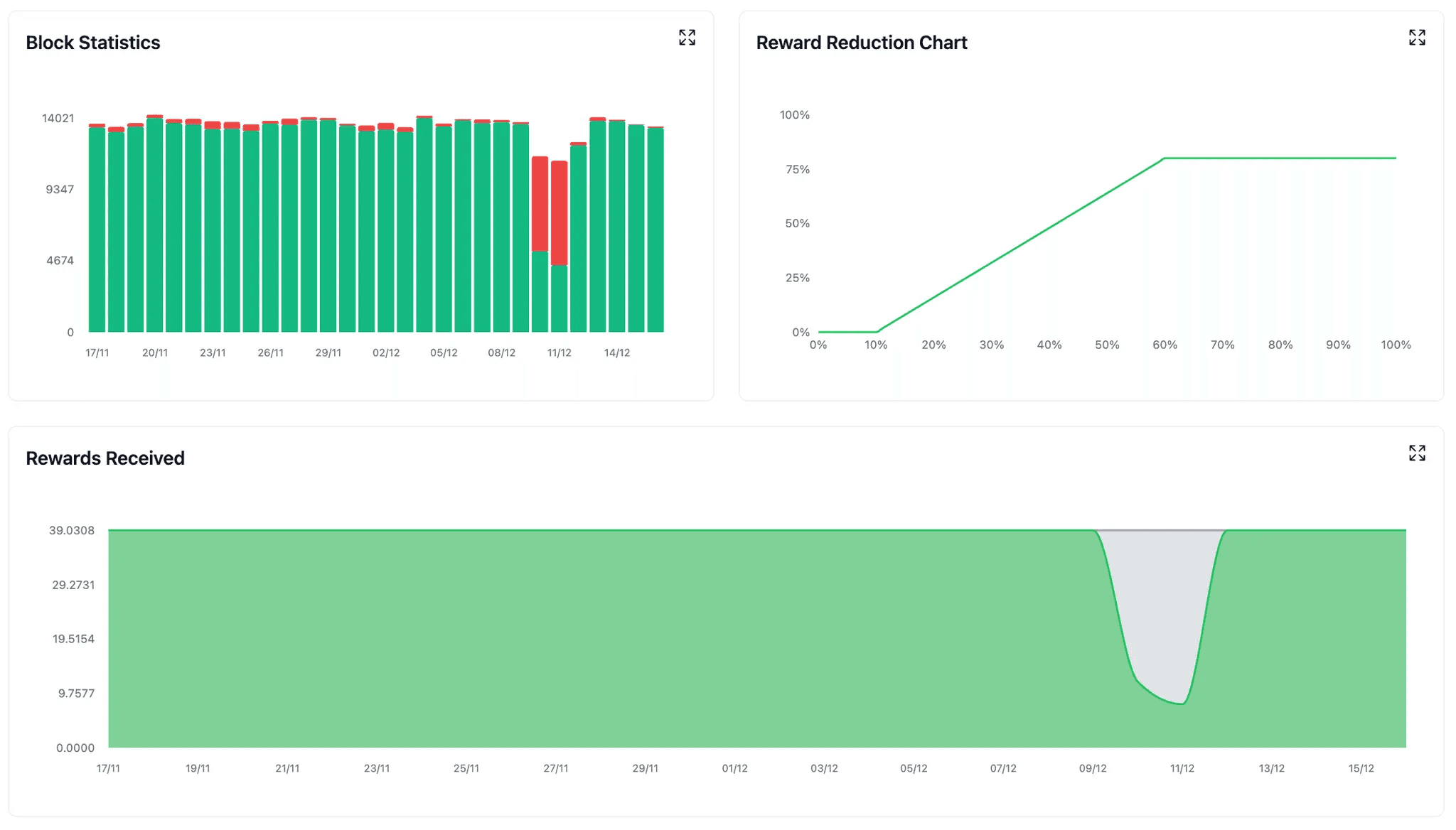

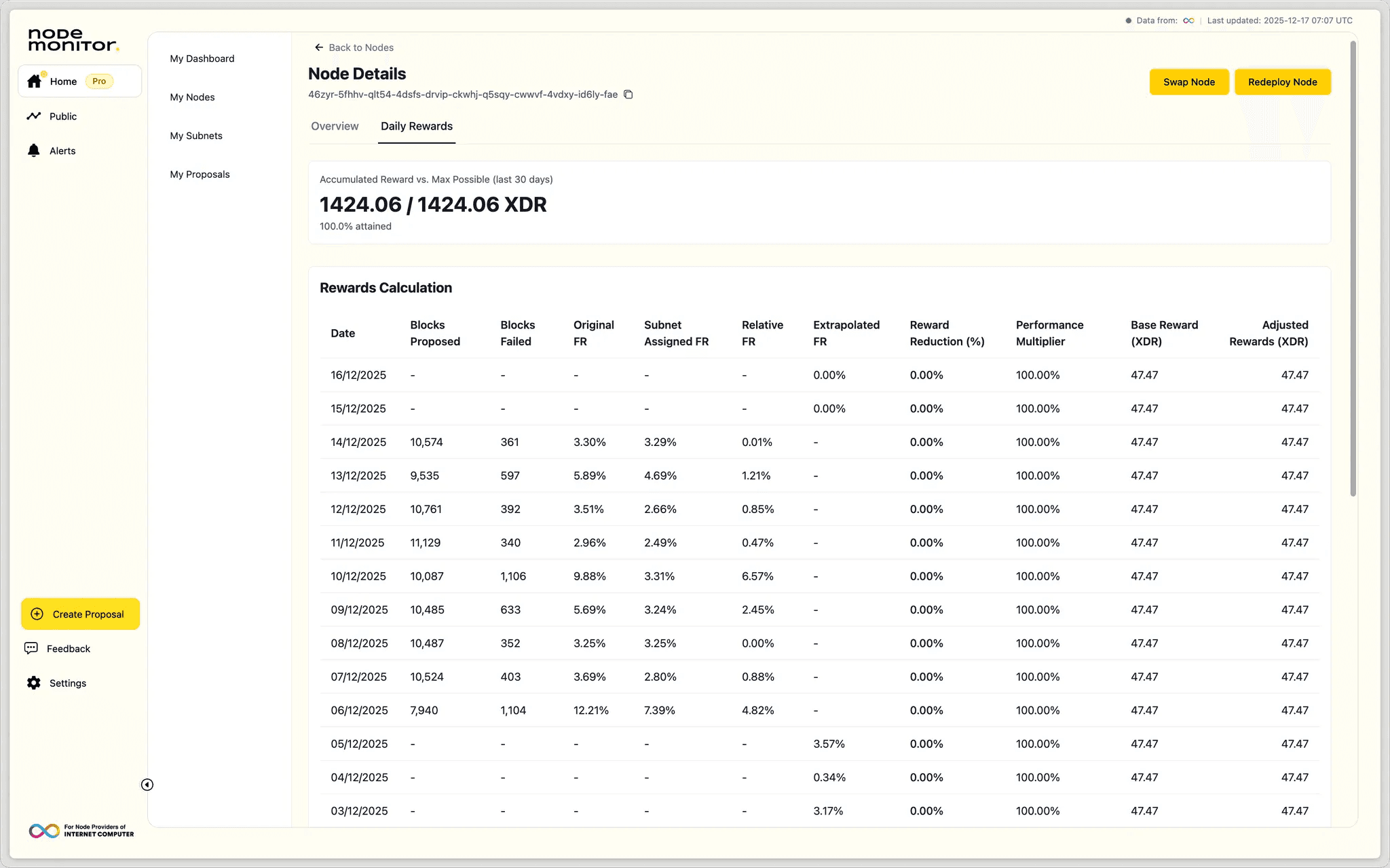

How Node Monitor Makes This Transparent

Node Monitor pulls directly from the node reward canister and surfaces every step of this process in a form that’s easier to understand.

On our public dashboard, you can inspect reward calculations for any node from any node provider.

For Pro users, we go deeper:

full reward breakdowns per node

clear visibility into whether a node’s rewards are direct or extrapolated

insight into a node’s reward attainment over the past 30 days

No spreadsheets. No guesswork.

Closing Thoughts

Extrapolation exists to fairly reward spare capacity without letting it escape accountability.

Once you understand how it works, one thing becomes obvious: subnet participation is the center of gravity for node rewards.

If you’re a node provider, knowing which of your nodes are assigned, and how they’re performing, isn’t just operational hygiene anymore. It directly shapes your economics, day by day.

That’s exactly the behavior performance-based rewards are designed to encourage.